Envision life

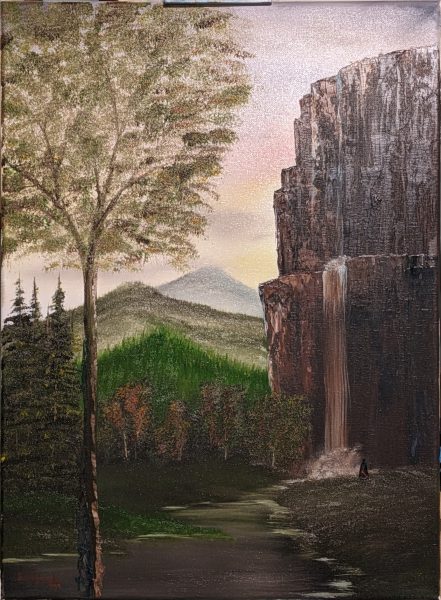

She is the noble lady
Of the emerald vesture
Who calls to me in dreams.
Through misted moonlight I have seen
The path that leads
To her glen and loch.
By light of dusk
On shadowed hills
We have there rejoined
Through glimpsing eyes,
Then turned to separate paths.
Now where is Ainé,
Keeper of my visions?
The dreamer of Glendalough,
The noble lady Miadh Ríoghan.
My friend.

© 2001 John Schneider. All rights reserved.

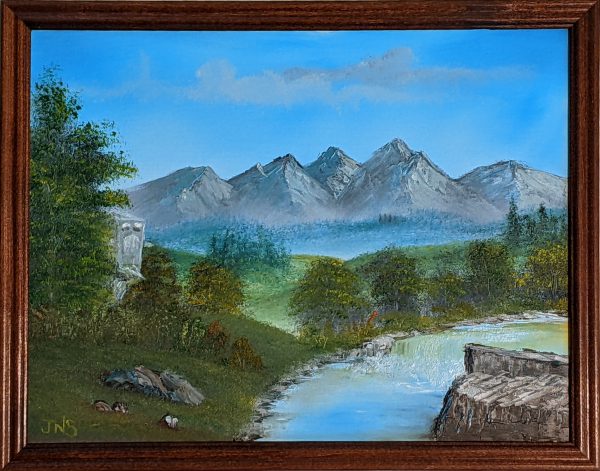

Sun beats down.
Bright light; slights night.
Depart the wilderness; desert waste.
Return to the valley; forest grove.
Then let the rains fall and cool the scorched soul.

© 2000 John Nelson Schneider. All rights reserved.

© 2023 John Schneider. All rights reserved.
